Extracting Data from Firebase Analytics without BigQuery

When getting started with Firebase Analytics, it can be confusing to figure out how to access the data from your analytics dashboard programmatically. A lot of the "official" guidance suggests using Google Cloud's BigQuery as a solution, but this doesn't necessarily make sense for smaller projects. In this article we'll be taking a look at an easier way to run ad hoc queries against your Firebase Analytics data.

Introduction

Firebase Analytics is a popular analytics platform which lets businesses aggregate data on activity generated by their products and services. It is particularly popular for mobile applications and supports logging up to 500 distinct event types per app.

Google Analytics, Firebase Analytics: What's the Difference?

There is sometimes confusion over what exactly the difference is between Google Analytics and Firebase Analytics. The latter is often referred to as Google Analytics for Firebase. In the past, developers viewed these two as totally separate entities.

As of today there is no difference between them because Google Analytics powers the analytics dashboard that appears in the Firebase console. Indeed, any Firebase Analytics project will require a Google Analytics property and any event data shown in the corresponding Firebase project dashboard will have been extracted from that Google property.

Firebase Events

Firebase events let us record snapshots of activity and view them in a dashboard. By default, there are a number of automatically collected events. A sample of these is shown below:

| Name | Description | Parameters |
|---|---|---|
| app_remove | User uninstalls application | none |
| app_store_subscription_renew | User renews paid subscription | product_id, price, value |
| os_update | User updates operating system | previous_os_version |
| screen_view | When transition between screens occurs | firebase_screen, engagement_time_msec |

Querying Event Data

Okay, so we have all these events and can also collect our own event data if we want, but how do we access all this data programmatically?

BigQuery

Google BigQuery always comes up as a suggestion for extracting data from Firebase Analytics. It can handle enormous volumes of data and offers the option to query the data using SQL or export it to third party services.

The downside of BigQuery is that you will not be able to access all your historic data unless you integrate it at the very start of your analytics project's lifetime, because the export only covers data collected after the integration is enabled. It could also be seen as unnecessary to use a tool like BigQuery on a dataset that's unlikely ever to grow to "big data" proportions.

Analytics Data API

Remember we said Google Analytics and Firebase Analytics are effectively the same? Well, this is good news, because it means we can use the Analytics Data API to access our analytics data programmatically. What's great about this is that it can be used in multiple programming languages (Java, Python, PHP, .NET, Node.js at the time of writing).
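To make this a little more concrete, here is a rough sketch of the kind of request body the Data API's `runReport` method accepts, in this case counting how often one event was logged per day. The helper name `buildEventCountRequest` and the property ID are made up for illustration. As far as I know, `date`, `eventName` and `eventCount` are standard dimension and metric names, but it is worth checking them against the API schema for your own property.

```javascript
// Hypothetical helper: builds a runReport request body that counts
// occurrences of a single event per day. Nothing is sent over the network.
function buildEventCountRequest(propertyId, eventName, startDate, endDate = "today") {
  return {
    property: `properties/${propertyId}`,
    dateRanges: [{ startDate, endDate }],
    dimensions: [{ name: "date" }],
    metrics: [{ name: "eventCount" }],
    // Only keep rows whose eventName matches the given value exactly.
    dimensionFilter: {
      filter: {
        fieldName: "eventName",
        stringFilter: { matchType: "EXACT", value: eventName },
      },
    },
  };
}

// Placeholder property ID; replace it with your own.
const request = buildEventCountRequest("123456789", "screen_view", "2021-05-01");
console.log(JSON.stringify(request, null, 2));
```

An object like this is what we will eventually hand to the client library once credentials are in place.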

Using the Analytics Client

The first thing we need to do is set up a Google Cloud service account, which is a type of non-human Google Cloud account with permission to access certain Google Cloud APIs.

I'm going to skip over the configuration of this account because the Google Cloud documentation on service accounts explains it really well, but the key thing about this process is that it will give you a service account key JSON file like the one below:

    // credentials.json
    {
      "type": "service_account",
      "project_id": "your-id",
      "private_key_id": "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx",
      "private_key": "-----BEGIN PRIVATE KEY-----\nYOUR_KEY_CONTENT_HERE\n-----END PRIVATE KEY-----\n",
      "client_email": "your-email-here",
      "client_id": "client-id-here",
      "auth_uri": "https://accounts.google.com/o/oauth2/auth",
      "token_uri": "https://oauth2.googleapis.com/token",
      "auth_provider_x509_cert_url": "https://www.googleapis.com/oauth2/v1/certs",
      "client_x509_cert_url": "client-cert-url-here"
    }
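If you want a quick sanity check that the downloaded file has the shape shown above, something like the following sketch could help. The function name `findKeyFileProblems` and the choice of which fields to check are my own assumptions rather than anything the client library requires you to do.

```javascript
// Hypothetical helper: lists problems found in a parsed service account key.
function findKeyFileProblems(key) {
  const problems = [];
  // These fields appear in the key file above; each should be a non-empty string.
  for (const field of ["project_id", "private_key", "client_email"]) {
    if (typeof key[field] !== "string" || key[field].trim() === "") {
      problems.push(`missing field: ${field}`);
    }
  }
  if (key.type !== "service_account") {
    problems.push(`unexpected type: ${key.type}`);
  }
  return problems;
}

// Example with an in-memory object. In practice you would pass the result of
// JSON.parse(fs.readFileSync("credentials.json", "utf8")) instead.
const sampleKey = { type: "service_account", project_id: "your-id", client_email: "" };
console.log(findKeyFileProblems(sampleKey));
```

An empty array means the basic fields are present; it says nothing about whether the key itself is still valid.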

At this point, we have our credentials. We'll revisit these later. Now it's time to write some queries.

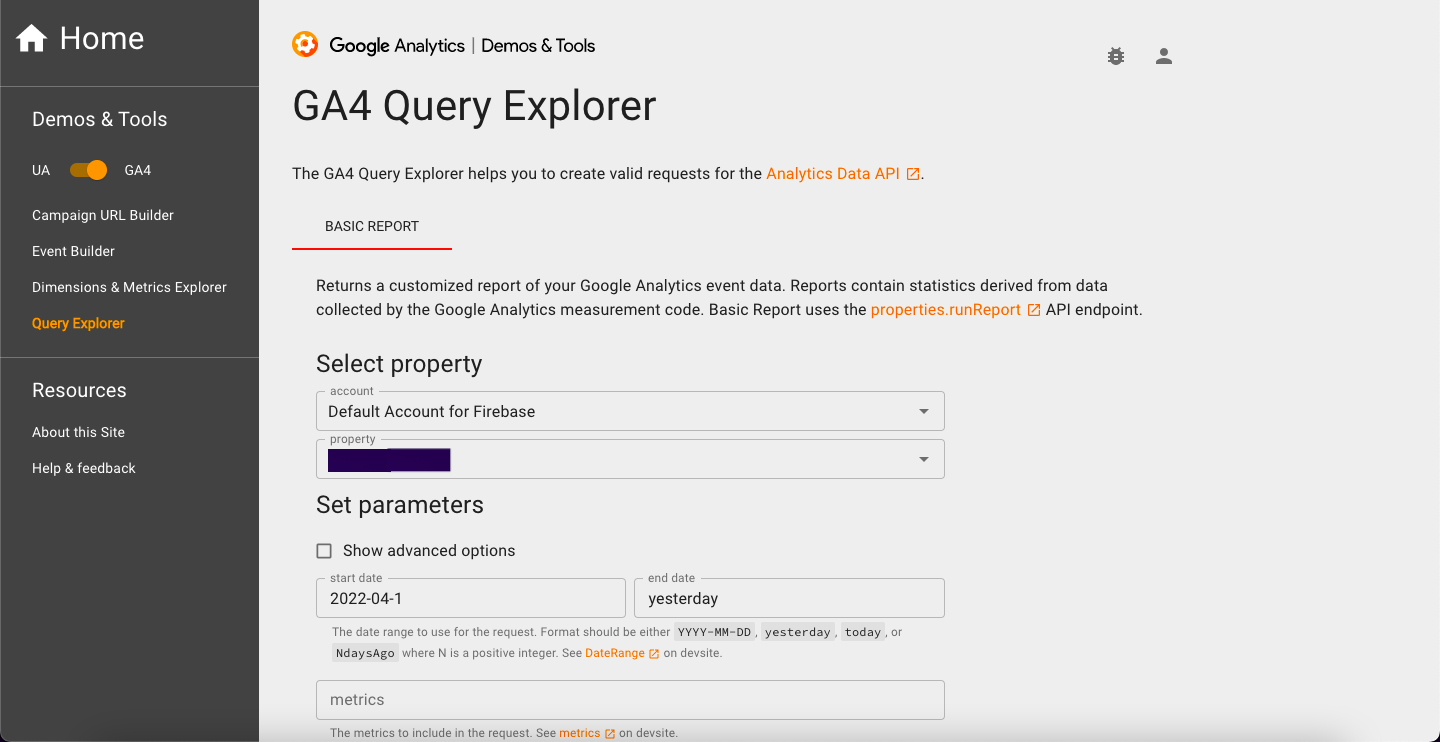

Google Analytics 4 Query Explorer

The GA4 Query Explorer tool is the best way to explore the data in your Analytics project.

This tool makes it a lot easier to generate the JSON inputs required by the Analytics Data API.

Using the tool is quite intuitive if you understand the event data your project is storing.

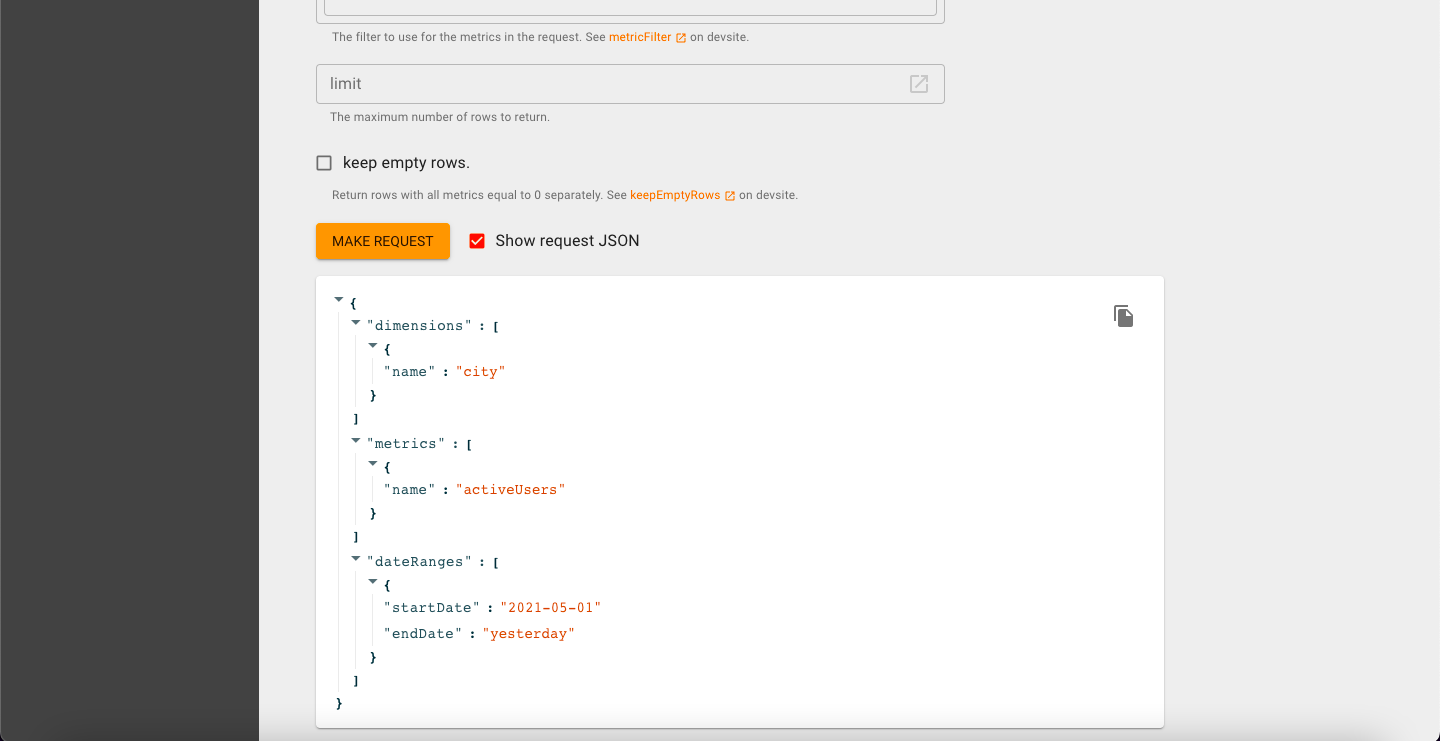

For example, let's try to get back active users starting from the beginning of May 2021, showing their city:

If we click the "copy" icon, we can access the JSON input and use it to make the same query programmatically through the Data API. Let's take a look at how that can be done.

Back to the Data API

At this point, we can start writing some code. Let's use the Node.js client library and try to run a query using the JSON input we just copied.

First, initialise the Node.js project:

    npm init
    npm i --save @google-analytics/data chalk
    npm i -D prettier

Your package.json should now look something like this. Note the `"type": "module"` entry, which you will need to add by hand so that Node.js treats the `.js` file as an ES module and accepts the `import` statements used later:

    // package.json
    {
      "name": "querying-firebase-analytics",
      "version": "1.0.0",
      "description": "A very simple project to test out the Google Analytics Data API",
      "main": "analytics-query.js",
      "type": "module",
      "scripts": {
        "test": "echo \"Error: no test specified\" && exit 1",
        "prettify": "prettier -w -u \"{,!(node_modules|.next)/**/}*.{js,jsx,ts,tsx}\""
      },
      "keywords": [
        "node.js",
        "firebase"
      ],
      "author": "Your Name",
      "license": "MIT",
      "dependencies": {
        "@google-analytics/data": "^3.0.0",
        "chalk": "^5.0.1"
      },
      "devDependencies": {
        "prettier": "^2.7.1"
      }
    }

Having set up the project, we can use the following highly simplified example to run a query and display the query output:

    // analytics-query.js

    /** Import the Google Analytics Data API library */
    import { BetaAnalyticsDataClient } from "@google-analytics/data";
    import chalk from "chalk";

    /** This constructor configures credentials using the GOOGLE_APPLICATION_CREDENTIALS environment variable. */
    const analyticsDataClient = new BetaAnalyticsDataClient();

    /** Run a report with the given params, print it, and return the response */
    async function runAnalyticsReport(params) {
      const [response] = await analyticsDataClient.runReport({
        property: `properties/${process.env.GOOGLE_ANALYTICS_PROPERTY_ID}`,
        ...params,
      });
      console.log("Printing report... ");
      printRows(response);
      return response;
    }

    /** Print the first dimension value of each row, followed by its metrics */
    function printRows({ rows, metricHeaders }) {
      rows.forEach((row) => {
        console.log(
          chalk.blue("---- First dimension value ----"),
          row.dimensionValues[0].value
        );
        printMetricHeadersForRow(metricHeaders, row);
      });
    }

    /** Print each metric name alongside its value for the given row */
    function printMetricHeadersForRow(metricHeaders, row) {
      metricHeaders.forEach((header, i) => {
        console.log(
          chalk.green(`${header.name} ----> ${row.metricValues[i].value}`)
        );
      });
    }

    /** Active users by location */
    runAnalyticsReport({
      dateRanges: [
        {
          startDate: "2021-05-01",
          endDate: "today",
        },
      ],
      dimensions: [
        {
          name: "city",
        },
      ],
      metrics: [
        {
          name: "activeUsers",
        },
      ],
    });

There are three points to note about the snippet above. First, note the use of the GOOGLE_ANALYTICS_PROPERTY_ID environment variable, which tells the client library which Google Analytics property we want to query. Second, notice that we have passed the JSON input to the runAnalyticsReport function, which should let us see the data when we execute the file. Third, note that we are returning the response, which will let us process the queried data downstream, while the logging lets us manually inspect data on an ad hoc basis.
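As a sketch of what that downstream processing might look like, the helper below flattens a response into plain objects keyed by dimension and metric name. The name `rowsToObjects` is hypothetical, the sample response is hand-written with invented values, and converting every metric to a number assumes you are only requesting numeric metrics.

```javascript
// Hypothetical helper: turns a runReport-style response into plain objects,
// one per row, keyed by dimension and metric names.
function rowsToObjects({ dimensionHeaders = [], metricHeaders = [], rows = [] }) {
  return rows.map((row) => {
    const record = {};
    dimensionHeaders.forEach((header, i) => {
      record[header.name] = row.dimensionValues[i].value;
    });
    metricHeaders.forEach((header, i) => {
      // Metric values arrive as strings, so convert them to numbers here.
      record[header.name] = Number(row.metricValues[i].value);
    });
    return record;
  });
}

// Hand-written sample in the shape of a response; the values are invented.
const sampleResponse = {
  dimensionHeaders: [{ name: "city" }],
  metricHeaders: [{ name: "activeUsers" }],
  rows: [
    { dimensionValues: [{ value: "London" }], metricValues: [{ value: "42" }] },
    { dimensionValues: [{ value: "Berlin" }], metricValues: [{ value: "17" }] },
  ],
};

const records = rowsToObjects(sampleResponse);
console.log(records);
```

Records in this shape are easy to pass on to a CSV writer, a database insert, or a charting library.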

Note: In addition to the GOOGLE_ANALYTICS_PROPERTY_ID environment variable, we also need a GOOGLE_APPLICATION_CREDENTIALS environment variable pointing at the service account key file, so that the client library can authenticate the request.
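Because a missing variable tends to surface as a fairly cryptic API error, it can be worth failing early instead. Here is a minimal sketch of such a guard; `assertEnv` is a made-up name, and the example runs against a plain object rather than the real environment.

```javascript
// Hypothetical helper: throws early if any required variable is unset or empty.
function assertEnv(names, env = process.env) {
  const missing = names.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
}

// Demonstrated against a plain object instead of process.env.
const fakeEnv = { GOOGLE_ANALYTICS_PROPERTY_ID: "123456789" };
let envError = null;
try {
  assertEnv(
    ["GOOGLE_ANALYTICS_PROPERTY_ID", "GOOGLE_APPLICATION_CREDENTIALS"],
    fakeEnv
  );
} catch (err) {
  envError = err.message;
  console.error(envError);
}
```

In the real script, calling `assertEnv([...])` with no second argument before constructing the client would check `process.env` directly.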

To keep these environment variables easy to manage, let's write a shell script to add these variables to the environment before executing the JS file.

Note: Doing this through a .env file would be even easier, and it is the way I would do this on a real-world project.
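For the curious, the idea behind a .env file is simple enough to sketch by hand. The parser below is deliberately minimal and hypothetical (`parseEnv` is my own name for it); on a real project the `dotenv` package or, in recent Node.js releases, the built-in `--env-file` flag would handle quoting and other edge cases far more robustly.

```javascript
// Hypothetical, deliberately minimal .env parser: handles KEY=value lines,
// blank lines and # comments, but not quoting or multi-line values.
function parseEnv(text) {
  const result = {};
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line === "" || line.startsWith("#")) continue;
    const separator = line.indexOf("=");
    if (separator === -1) continue;
    const key = line.slice(0, separator).trim();
    result[key] = line.slice(separator + 1).trim();
  }
  return result;
}

// Example file contents with placeholder values.
const exampleFile = [
  "# .env (example values)",
  "GOOGLE_APPLICATION_CREDENTIALS=./credentials.json",
  "GOOGLE_ANALYTICS_PROPERTY_ID=123456789",
].join("\n");

const parsed = parseEnv(exampleFile);
// Object.assign(process.env, parsed) would then expose these to the client.
console.log(parsed);
```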

The script below is just a convenience wrapper for running node analytics-query.js while ensuring that the environment variables are properly set.

    #!/usr/bin/env bash
    # execute.sh

    GOOGLE_CLOUD_CREDENTIALS="credentials.json"
    GA_PROPERTY_ID=123456789 # Change to yours
    DIR=$(pwd)
    CREDENTIALS_PATH="${DIR}/${GOOGLE_CLOUD_CREDENTIALS}"

    # Make both values visible to the Node.js process started below
    export GOOGLE_APPLICATION_CREDENTIALS="${CREDENTIALS_PATH}"
    export GOOGLE_ANALYTICS_PROPERTY_ID="${GA_PROPERTY_ID}"

    node analytics-query.js

Let's not forget to make this script executable:

chmod +x execute.sh

Now it's time to test out the code:

./execute.sh

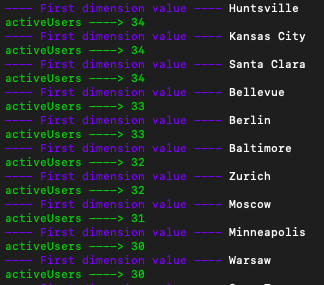

After running this, the output in the Terminal should look something like this:

Summary

This article was a simple guide to extracting and querying Firebase Analytics data without relying on BigQuery. The Analytics Data API is a great solution for this and can be used in several programming languages, making it ideal for ad hoc querying and programmatic access.
