Using Jemalloc to Reduce Rails Memory Use on Amazon Linux

We were facing a major memory issue with our production Ruby on Rails system running in an auto-scaling group of EC2 Amazon Linux instances. The system was a monolithic GraphQL API designed to handle routine business logic and consume various cloud services.

Despite the relatively low levels of traffic the API was receiving, its Puma application servers were consuming memory resources at an alarmingly high rate.

Even during lulls in user traffic, our system's memory consumption kept rising until it reached several GB, which was worrying from both a financial and an operational perspective.

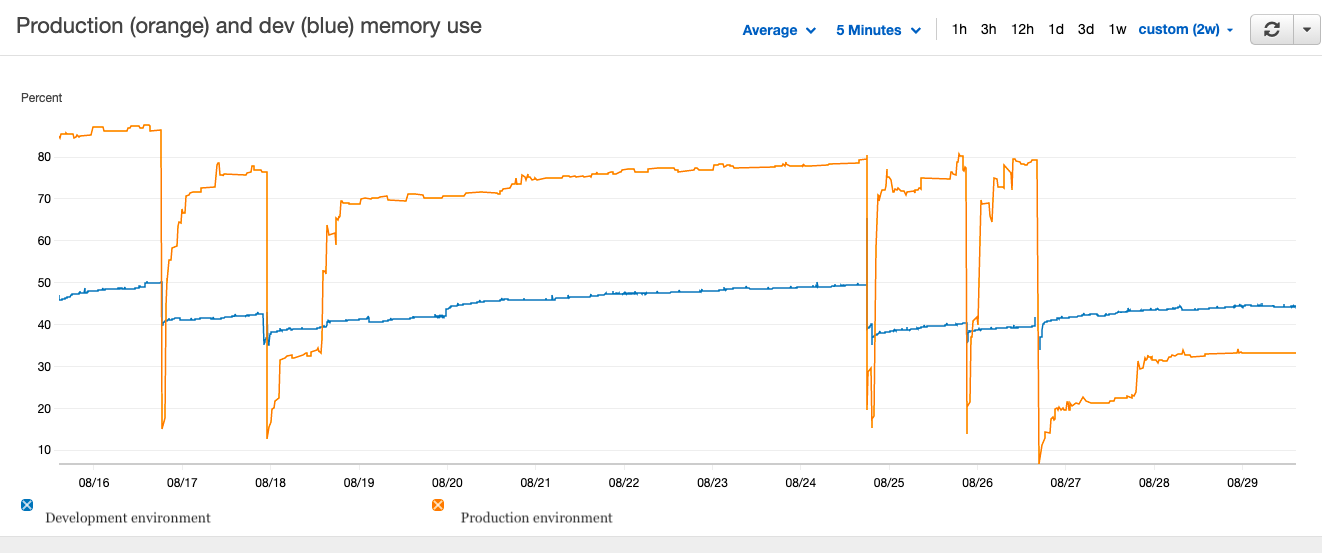

Figure 1 shows memory consumption in our production and development environments. Although it was quite low in development, the production environment was using a lot of memory (the low points came during deployments).

Initial Investigation

Very quickly we started doubting the quality of our own work:

- Were there memory leaks in our code?

- Were there memory leaks in one of our gems (i.e. our dependencies)?

- Had we forgotten some basic optimisations (e.g. database indexing, server-side pagination, rate limiting)?

- Or could someone be launching a DDoS attack against us?

Luckily we had configured detailed monitoring through CloudWatch. This enabled us to see exactly how the patterns of memory consumption changed from minute to minute.

In spite of all the great insights we had through CloudWatch, we were no closer to knowing why memory use was so high. To that end, we tested out a few memory profiling solutions for Ruby.

The excellent memory_profiler gem was very useful, but only insofar as it confirmed that there was no single GraphQL query or mutation that was particularly memory-intensive.

Example of memory_profiler gem:

```ruby
require 'memory_profiler'

report = MemoryProfiler.report do
  # Ruby code here
end

report.pretty_print
```
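For a rougher first pass that needs no gem, Ruby's built-in GC statistics can count how many objects a block allocates. This is only a minimal sketch: `count_allocations` is a hypothetical helper name, not part of memory_profiler, and it reports object counts rather than bytes.

```ruby
# Hypothetical helper: counts objects allocated while the block runs,
# using only Ruby's built-in GC statistics (no gems required).
def count_allocations
  before = GC.stat(:total_allocated_objects)
  yield
  GC.stat(:total_allocated_objects) - before
end

# Illustrative workload: builds 1,000 short strings.
allocated = count_allocations do
  1_000.times.map { |i| "item-#{i}" }
end

puts "objects allocated: #{allocated}"
```

Wrapping individual resolvers or service calls in a helper like this gives a quick way to compare their relative allocation cost before reaching for a full profiler report.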

Having come up with nothing so far, we decided to dig a little deeper.

Side note: we did find out that one endpoint, used for geospatial data querying, was extremely CPU-hungry, but no single block of code seemed to be responsible for driving memory up so high.

Attempted "Quick Fixes"

We tried a few "quick fixes" in our dev environment, including automatic reboots and automatic re-deployments.

Scheduling automatic reboots of our instances every few hours did indeed bring memory consumption down, but it also resulted in downtime, so we quickly abandoned this option.

Our next "quick fix" consisted of setting up an EventBridge cron job to rerun our CI/CD pipeline through CodePipeline every few hours, but this too was deemed impractical for use in production.

Most of our pre-emptive "quick fixes" were ruled out for the same set of reasons:

- Addition of unnecessary extra cost

- Potential for downtime

- If new (genuine) memory leaks were to arise, our temporary solution might disguise them or fill us with false optimism regarding our code's efficiency

- A vague feeling of it not being "best practice"

It was clear that we needed a more sustainable solution.

More Research

Mike Perham, the author and maintainer of the Sidekiq background job processor, explains that memory bloat is very common in multithreaded Rails workloads because of a fundamental incompatibility between the memory allocation patterns of MRI, the Ruby interpreter, and those of GNU glibc, the allocator used on most Linux distributions.

Perham states that making the switch to jemalloc, a different memory allocator, can reduce memory consumption fourfold, but concedes that it may not be possible on Alpine Linux.

Rails performance expert Nate Berkopec goes even deeper on Ruby memory issues, allaying our earlier fears of a memory leak by demonstrating that memory growth tends to be linear when caused by a memory leak, a trend decidedly at odds with the rather more logarithmic trend exhibited by our own memory growth.
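To make the linear-versus-logarithmic distinction concrete, the sketch below fits both shapes to a series of memory samples and reports which one fits better. The sample figures are invented for illustration and are not our CloudWatch data; `r_squared` and `growth_shape` are hypothetical helper names, and the comparison assumes the samples are not all identical.

```ruby
# Coefficient of determination (R^2) for a least-squares line y = a + b*x.
def r_squared(xs, ys)
  n = xs.size.to_f
  mean_x = xs.sum / n
  mean_y = ys.sum / n
  sxy = xs.zip(ys).sum { |x, y| (x - mean_x) * (y - mean_y) }
  sxx = xs.sum { |x| (x - mean_x)**2 }
  syy = ys.sum { |y| (y - mean_y)**2 }
  (sxy**2) / (sxx * syy)
end

# Compares a straight-line fit against a fit on log(time) and
# returns whichever shape explains the samples better.
def growth_shape(minutes, memory_mb)
  linear = r_squared(minutes, memory_mb)
  logarithmic = r_squared(minutes.map { |t| Math.log(t) }, memory_mb)
  linear >= logarithmic ? :linear : :logarithmic
end

# Made-up samples taken every 30 minutes.
minutes = (1..12).map { |i| i * 30.0 }
leak_like = minutes.map { |t| 300 + 2.0 * t }               # steady climb
plateau_like = minutes.map { |t| 300 + 400 * Math.log(t) }  # levels off

puts growth_shape(minutes, leak_like)
puts growth_shape(minutes, plateau_like)
```

A result of `:logarithmic` on real samples would point towards fragmentation or bloat rather than a classic leak, though a fit this crude is a hint and not a diagnosis.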

Because Ruby processes cannot move objects around in memory, a certain level of fragmentation is normal, and this fragmentation is ultimately responsible for Ruby's high memory consumption. This intrinsic weakness of Ruby is exacerbated by the multiple layers of abstraction separating memory from the typical Ruby programmer, thereby obscuring the issue.

In essence, says Berkopec, memory allocation is a bin packing problem: it involves fitting many differently sized objects into many differently sized spaces in memory in such a way that the objects occupy as little space as possible. At the end of the article, the author recommends jemalloc as one of the three best ways to reduce the memory usage of Ruby processes.
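A toy model can illustrate how fragmentation wastes memory when objects cannot be moved. The sketch below is not how MRI or glibc actually manage memory; `ToyHeap` and its first-fit strategy are assumptions chosen purely for illustration.

```ruby
# Toy heap: an array of fixed-size slots, where nil means "free".
# Objects are never moved once placed.
class ToyHeap
  def initialize(size)
    @slots = Array.new(size)
  end

  # First-fit: place the object in the first run of free slots that is
  # long enough. Returns the start index, or nil if no run is big enough.
  def allocate(id, length)
    start = (0..@slots.size - length).find do |i|
      @slots[i, length].all?(&:nil?)
    end
    return nil unless start

    @slots.fill(id, start, length)
    start
  end

  # Releases every slot held by the given object.
  def free(id)
    @slots.map! { |slot| slot == id ? nil : slot }
  end

  def free_slots
    @slots.count(&:nil?)
  end
end

heap = ToyHeap.new(8)
%i[a b c d].each { |id| heap.allocate(id, 2) } # heap is now full
heap.free(:a)
heap.free(:c)

puts heap.free_slots    # 4 slots are free in total...
p heap.allocate(:e, 3)  # ...but no run of 3 exists, so this prints nil
```

Half of the toy heap is free, yet a three-slot object cannot be placed, so a real allocator in this position would have to request more memory from the operating system.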

At this point we were convinced that jemalloc was the solution to our problems; what we needed now was a way to implement it on our Linux servers.

Implementing Jemalloc

To reap all the benefits of jemalloc, we had to make sure that all our production workloads would run on an instance with Ruby configured to use jemalloc as its memory allocator.

This meant launching a new EC2 instance and executing the script below after connecting to the instance over SSH (credit to Matthew Lein for this). Please note that the version of Ruby will need to match yours (if you are unsure about how to install Ruby on Amazon Linux 2, there are lots of helpful guides like this one).

Installing jemalloc on Amazon Linux 2:

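```shell
# Run these interactively over SSH; "sudo su" opens a root shell
sudo su
# Enable the EPEL repository, then install jemalloc and its headers
sudo amazon-linux-extras install -y epel
sudo yum install -y jemalloc-devel
# Download and unpack the Ruby source (change 2.7.4 to match your version)
wget https://cache.ruby-lang.org/pub/ruby/2.7/ruby-2.7.4.tar.gz
tar xvzf ruby-2.7.4.tar.gz
cd ruby-2.7.4
# Configure the build to link against jemalloc, then compile and install
LDFLAGS=-L/opt/rubies/ruby-2.7.4/lib CPPFLAGS=-I/opt/rubies/ruby-2.7.4/include ./configure --prefix=/opt/rubies/ruby-2.7.4 --with-jemalloc --disable-install-doc
make
sudo make install
```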

This script does the following:

- Gives you superuser privileges

- Enables the EPEL repository, making EPEL packages (like jemalloc) available through the standard yum commands

- Installs jemalloc and its development headers using yum

- Downloads a gzipped tar archive of the source for the requested Ruby version

- Extracts the archive

- Changes directory to the extracted Ruby source

- Configures the Ruby build to link against jemalloc and to install under /opt/rubies/ruby-2.7.4

- Compiles the newly configured Ruby with make (you may see your CPU spike significantly while this runs)

- Installs the compiled Ruby into the configured prefix

After this script has finished running, you can run ruby -r rbconfig -e "puts RbConfig::CONFIG['MAINLIBS']" to check whether the Ruby on your instance is linked against jemalloc. Make sure the command invokes the newly built Ruby, which the script installs under /opt/rubies/ruby-2.7.4/bin.

As long as the response contains -ljemalloc, you can safely assume that it has worked.
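The same check can be wrapped in a small Ruby helper, for example to run as a smoke test when an instance boots. This is a minimal sketch: `jemalloc_linked?` is a hypothetical name, and checking the LIBS key in addition to MAINLIBS is an assumption intended to cover Ruby builds that record the linker flag there instead.

```ruby
require 'rbconfig'

# Hypothetical helper: true if the given build configuration mentions jemalloc.
# MAINLIBS is the key used in the command above; LIBS is checked as well
# (an assumption, for builds that record the flag under that key).
def jemalloc_linked?(config = RbConfig::CONFIG)
  %w[MAINLIBS LIBS].any? { |key| config[key].to_s.include?('jemalloc') }
end

linked = jemalloc_linked?
puts linked ? 'jemalloc is linked' : 'jemalloc is NOT linked'
```

Accepting the configuration as a parameter keeps the helper easy to test with a plain hash, while the default inspects the Ruby that is actually running.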

Once you have verified that the Ruby version is using jemalloc, you will need to create a new Amazon Machine Image (AMI) from the instance and configure your production workloads to use that image on all EC2 instances.

Naturally this process will be a little different for containerised workloads.

Quick point: this will work both for EC2 workloads running independently and for those provisioned through a custom CloudFormation template, Terraform stack, Cloud Development Kit (CDK) script, or whatever other "infrastructure as code" solution you may be using. If you are using AWS Elastic Beanstalk, you will need to make sure your environment is set to use your newly created jemalloc-powered AMI.

Results

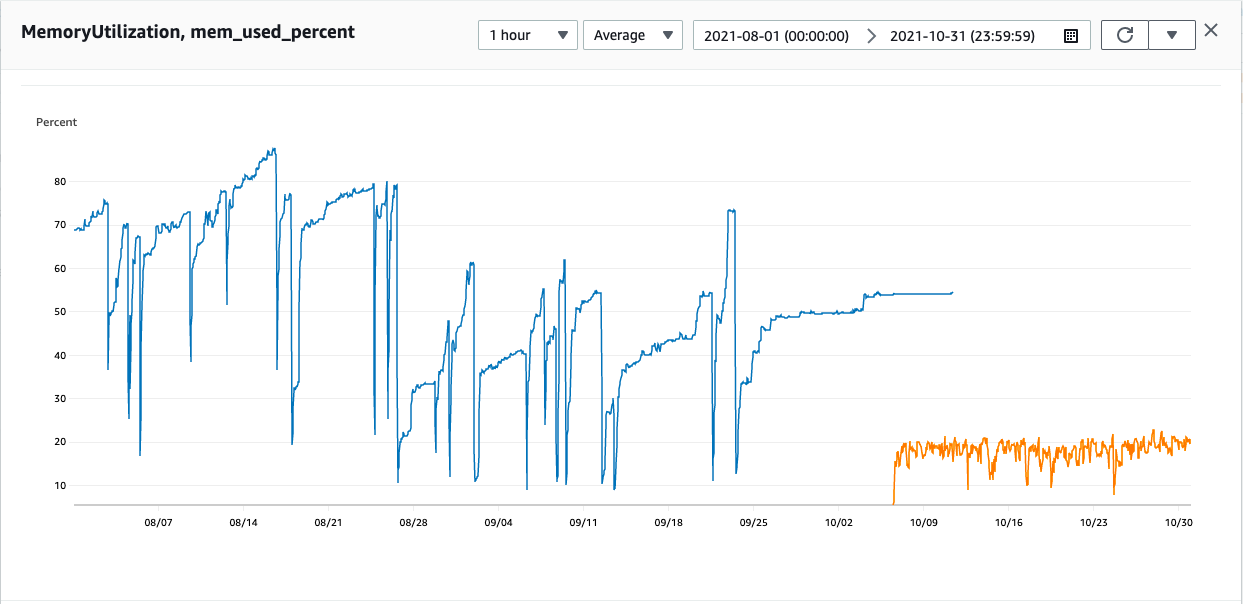

After implementing jemalloc on our machine image and relaunching our servers, the results were very encouraging.

Memory use had dropped by around 75%, equating to huge cost savings when running at scale. Although cost would not necessarily scale linearly with memory usage, the potential savings were still significant.

Where before there had been doubt, there was now confidence.

We are very pleased with what jemalloc has done for our cloud outlook, and we would recommend it to anyone who finds themselves in the same situation.
