Using the AWS Database Migration Service to Clone and Depersonalise Sensitive Data

The AWS Database Migration Service (DMS) is usually associated with moving databases from on-premises to the cloud, or from one cloud provider to another, but an upgrade from early 2025 means it can now also be used to mask sensitive data. This has some useful applications when building ISO-compliant solutions that adhere to data privacy regulations like GDPR.

In this article we're going to look at some of the core concepts behind DMS and how we can bring these together to handle the redaction of sensitive data during a migration task.

Note: To keep the content accessible to everyone, this article does not document the steps required to provision DMS resources in the AWS management console, as following along would incur a significant expense.

DMS Core Concepts

There are three main concepts to understand before getting started with DMS:

- Replication Instances

- Endpoints

- Replication Tasks

Replication Instances

A replication instance is essentially a managed EC2 instance which provides the memory, compute and networking resources required to run migration jobs. Although officially an EC2 instance, replication instances are abstracted away from the EC2 service and won't be retrievable via the EC2 UI, CLI or API.

Like regular EC2, DMS supports a number of different instance types, such as dms.t2 (general purpose, burstable), dms.c5 (compute-optimized) and dms.r5 (memory-optimized). The right choice depends on the needs of the workload you're running.

The role of a replication instance is to fetch data from the source endpoint, apply any specified transformations, and load it into the target endpoint.
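
For readers who would rather script this than use the console, a replication instance can be described as a plain set of parameters. The sketch below only builds that dictionary and provisions nothing. The keyword names follow boto3's `create_replication_instance` call, while the helper name, the identifier, the `dms.t3.medium` class and the storage size are placeholder assumptions rather than recommendations.

```python
def replication_instance_params(identifier, instance_class="dms.t3.medium", storage_gb=50):
    """Build keyword arguments for boto3's dms.create_replication_instance.

    The default class and storage size are illustrative assumptions.
    """
    return {
        "ReplicationInstanceIdentifier": identifier,
        "ReplicationInstanceClass": instance_class,
        "AllocatedStorage": storage_gb,   # in GB
        "PubliclyAccessible": False,      # keep the instance inside the VPC
        "MultiAZ": False,
    }


params = replication_instance_params("masking-demo")

# To actually provision the instance (this incurs cost):
# import boto3
# boto3.client("dms").create_replication_instance(**params)
```

Keeping the parameters separate from the API call makes it easy to review or version them before anything billable is created.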

Endpoints

An endpoint is a set of connection details for a particular data store. This includes certain key pieces of information, most notably:

- Engine type (for example MySQL, PostgreSQL or S3)

- Connection parameters (hostname, port, username, password)

- Further configuration based on the database type (encryption, schema selection for MySQL, database name for PostgreSQL)

A source endpoint is the data store that data is read from. A target endpoint is the data store that data is migrated to.
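
As a rough illustration, the connection details above map onto a small set of parameters. The sketch below builds them as a dictionary without contacting AWS. The keyword names follow boto3's `create_endpoint` call; the helper name, hostname, database name and the `SOURCE_DB_PASSWORD` environment variable are hypothetical.

```python
import os


def endpoint_params(identifier, endpoint_type, engine, host, port, user, password, database):
    """Build keyword arguments for boto3's dms.create_endpoint."""
    if endpoint_type not in ("source", "target"):
        raise ValueError("endpoint_type must be 'source' or 'target'")
    return {
        "EndpointIdentifier": identifier,
        "EndpointType": endpoint_type,
        "EngineName": engine,          # e.g. "postgres" or "mysql"
        "ServerName": host,
        "Port": port,
        "Username": user,
        "Password": password,
        "DatabaseName": database,
        "SslMode": "require",
    }


source = endpoint_params(
    identifier="users-source",
    endpoint_type="source",
    engine="postgres",
    host="db.internal.example.com",   # hypothetical hostname
    port=5432,
    user="dms_reader",
    password=os.environ.get("SOURCE_DB_PASSWORD", ""),  # hypothetical variable name
    database="app",
)

# import boto3
# boto3.client("dms").create_endpoint(**source)
```

Reading the password from the environment keeps credentials out of source control; a secrets manager would be the more robust option in practice.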

Replication Tasks

A replication task specifies how data will be moved between the source endpoint and the target endpoint. The task will control the overall migration process, including:

- Migration type. This can be "full load", where all the data is migrated once, an ongoing replication using Change Data Capture, or a combination of both.

- Table mappings. These are rules that determine which data is migrated. We have the option to define the schemas, tables and columns we wish to migrate. For MySQL databases the schema name will typically be the name of the database to migrate, while for PostgreSQL databases the source endpoint will typically already point at a specific database.

- Transformation rules. These let us modify schema names, change the data types of columns, and also apply masking rules to sensitive data fields.
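
The three settings above come together when a task is defined. The following is a minimal sketch, assuming boto3's `create_replication_task` keyword names; the helper name and the ARNs are placeholders, and the single selection rule simply includes every table in the `public` schema.

```python
import json

# Migration types accepted by DMS: one-off copy, CDC only, or both.
MIGRATION_TYPES = {"full-load", "cdc", "full-load-and-cdc"}


def replication_task_params(identifier, source_arn, target_arn, instance_arn,
                            table_mappings, migration_type="full-load"):
    """Build keyword arguments for boto3's dms.create_replication_task."""
    if migration_type not in MIGRATION_TYPES:
        raise ValueError(f"unknown migration type: {migration_type}")
    return {
        "ReplicationTaskIdentifier": identifier,
        "SourceEndpointArn": source_arn,
        "TargetEndpointArn": target_arn,
        "ReplicationInstanceArn": instance_arn,
        "MigrationType": migration_type,
        # DMS expects the table mappings as a JSON string, not a dict.
        "TableMappings": json.dumps(table_mappings),
    }


mappings = {
    "rules": [
        {
            "rule-type": "selection",
            "rule-id": "1",
            "rule-name": "include-all-public",
            "object-locator": {"schema-name": "public", "table-name": "%"},
            "rule-action": "include",
        }
    ]
}

task = replication_task_params(
    "clone-and-mask",
    source_arn="arn:aws:dms:region:account:endpoint:SOURCE",   # placeholder
    target_arn="arn:aws:dms:region:account:endpoint:TARGET",   # placeholder
    instance_arn="arn:aws:dms:region:account:rep:INSTANCE",    # placeholder
    table_mappings=mappings,
)
```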

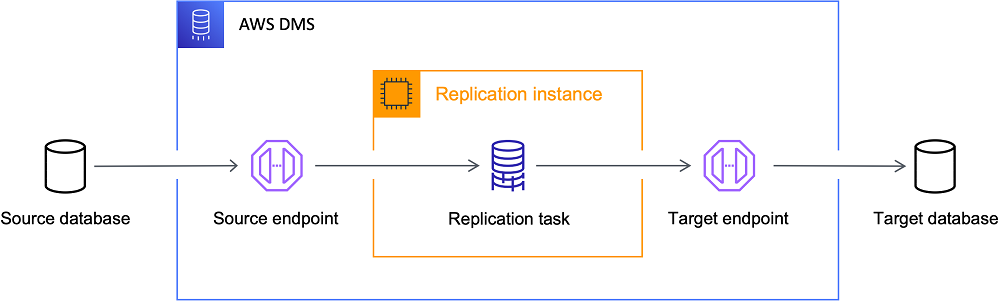

Sample Architecture

The following diagram helps visualise the three core components of DMS and how they interact to let us move data from one network location to another.

Data Masking

Data masking rules can be used to hide certain pieces of sensitive or personal data. There are several scenarios where this might be useful:

- Anonymise Personally Identifiable Information (PII) before moving data to dev/test environments

- Mask health data or financial information before migrating to data analytics systems or non-prod environments

- Ensure compliance with relevant legislation and standards (GDPR, PCI DSS)

Transformation Rules

Here's an example of a set of transformation rules that would let us select the users table of a hypothetical PostgreSQL database and apply masking rules to some columns that are known to contain sensitive data (email, full_name and phone_number):

    {
      "rules": [
        {
          "rule-type": "selection",
          "rule-id": "1",
          "rule-name": "include-users",
          "object-locator": {
            "schema-name": "public",
            "table-name": "users"
          },
          "rule-action": "include"
        },
        {
          "rule-type": "transformation",
          "rule-id": "2",
          "rule-name": "hash-mask-email",
          "rule-target": "column",
          "object-locator": {
            "schema-name": "public",
            "table-name": "users",
            "column-name": "email"
          },
          "rule-action": "data-masking-hash-mask"
        },
        {
          "rule-type": "transformation",
          "rule-id": "3",
          "rule-name": "hash-mask-fullname",
          "rule-target": "column",
          "object-locator": {
            "schema-name": "public",
            "table-name": "users",
            "column-name": "full_name"
          },
          "rule-action": "data-masking-hash-mask"
        },
        {
          "rule-type": "transformation",
          "rule-id": "4",
          "rule-name": "randomize-phone",
          "rule-target": "column",
          "object-locator": {
            "schema-name": "public",
            "table-name": "users",
            "column-name": "phone_number"
          },
          "rule-action": "data-masking-digits-randomize"
        }
      ]
    }
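
Because the masking rules differ only in column name and action, they can be generated rather than written by hand. The helper below is a hypothetical convenience, not part of DMS: it takes a column-to-action mapping and produces a rule set with the same shape as the JSON above, with slightly different rule names.

```python
import json


def masking_rules(schema, table, column_actions):
    """Return a DMS table-mapping dict: one selection rule plus one
    masking transformation rule per column in column_actions."""
    rules = [
        {
            "rule-type": "selection",
            "rule-id": "1",
            "rule-name": f"include-{table}",
            "object-locator": {"schema-name": schema, "table-name": table},
            "rule-action": "include",
        }
    ]
    # Rule ids must be unique, so number the transformations from 2 upwards.
    for rule_id, (column, action) in enumerate(column_actions.items(), start=2):
        rules.append(
            {
                "rule-type": "transformation",
                "rule-id": str(rule_id),
                "rule-name": f"mask-{column}",
                "rule-target": "column",
                "object-locator": {
                    "schema-name": schema,
                    "table-name": table,
                    "column-name": column,
                },
                "rule-action": action,
            }
        )
    return {"rules": rules}


mapping = masking_rules(
    "public",
    "users",
    {
        "email": "data-masking-hash-mask",
        "full_name": "data-masking-hash-mask",
        "phone_number": "data-masking-digits-randomize",
    },
)
print(json.dumps(mapping, indent=2))
```

Generating the rules from a single mapping makes it harder to forget a column or reuse a rule id as the list of sensitive fields grows.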

Selecting a Sample of the Data

Let's say we're only interested in migrating a subset of our data and don't need the full contents of our users table. We can add a filter to the selection rule to do just that. In this example we select only users whose created_at value falls in May 2025:

    {
      "rules": [
        {
          "rule-type": "selection",
          "rule-id": "1",
          "rule-name": "include-users-may-2025",
          "object-locator": {
            "schema-name": "public",
            "table-name": "users"
          },
          "rule-action": "include",
          "filters": [
            {
              "filter-type": "source",
              "column-name": "created_at",
              "filter-conditions": [
                {
                  "filter-operator": "between",
                  "start-value": "2025-05-01",
                  "end-value": "2025-05-31 23:59:59"
                }
              ]
            }
          ]
        },
        {
          "rule-type": "transformation",
          "rule-id": "2",
          "rule-name": "hash-mask-email",
          "rule-target": "column",
          "object-locator": {
            "schema-name": "public",
            "table-name": "users",
            "column-name": "email"
          },
          "rule-action": "data-masking-hash-mask"
        },
        {
          "rule-type": "transformation",
          "rule-id": "3",
          "rule-name": "hash-mask-fullname",
          "rule-target": "column",
          "object-locator": {
            "schema-name": "public",
            "table-name": "users",
            "column-name": "full_name"
          },
          "rule-action": "data-masking-hash-mask"
        },
        {
          "rule-type": "transformation",
          "rule-id": "4",
          "rule-name": "randomize-phone",
          "rule-target": "column",
          "object-locator": {
            "schema-name": "public",
            "table-name": "users",
            "column-name": "phone_number"
          },
          "rule-action": "data-masking-digits-randomize"
        }
      ]
    }
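
Hard-coding the last day of the month is easy to get wrong for other months. As a small sketch, the hypothetical helper below builds the same `between` filter for any calendar month, using the standard library to find the month's final day.

```python
import calendar


def month_filter(column, year, month):
    """Build a DMS source filter covering one whole calendar month."""
    last_day = calendar.monthrange(year, month)[1]  # number of days in the month
    return {
        "filter-type": "source",
        "column-name": column,
        "filter-conditions": [
            {
                "filter-operator": "between",
                "start-value": f"{year:04d}-{month:02d}-01",
                "end-value": f"{year:04d}-{month:02d}-{last_day:02d} 23:59:59",
            }
        ],
    }


may_2025 = month_filter("created_at", 2025, 5)
```

The returned dictionary can be placed in the `filters` list of a selection rule, as in the example above.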

MySQL and Postgres Compatibility

One point worth noting is that the three built-in DMS masking transforms have limited compatibility with MySQL and PostgreSQL data types.

For example, masking transforms can't currently be used to redact PostgreSQL text or jsonb columns, or blob data types. The full compatibility listing can be found in the AWS DMS documentation.
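
One way to catch this early is a pre-flight check on the columns you plan to mask. The sketch below is an assumption-laden illustration: the unsupported set contains only the types named in this article and should be replaced with the full list from the documentation, and the column types are supplied by hand rather than queried from the database.

```python
# Assumption: seeded only with the types mentioned in this article.
UNSUPPORTED_TYPES = {"text", "jsonb", "blob"}


def unmaskable_columns(column_types, masked_columns):
    """Return the masked columns whose data type is in UNSUPPORTED_TYPES."""
    return sorted(
        column
        for column in masked_columns
        if column_types.get(column, "").lower() in UNSUPPORTED_TYPES
    )


# Hypothetical column types for the users table.
users_columns = {
    "email": "varchar",
    "full_name": "text",
    "phone_number": "varchar",
}

problems = unmaskable_columns(users_columns, ["email", "full_name", "phone_number"])
print(problems)
```

In a real project the type mapping could come from a query against `information_schema.columns` on the source database.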

Summary

This article was a brief overview of the core components of AWS DMS and how the service can be used to depersonalise sensitive data as part of a compliant, scalable cloud solution.
